On this week’s episode of The Dose, host Joel Bervell speaks with Dr. Ziad Obermeyer, from the UC Berkeley School of Public Health, about the potential of AI to inform health outcomes — for better and for worse.

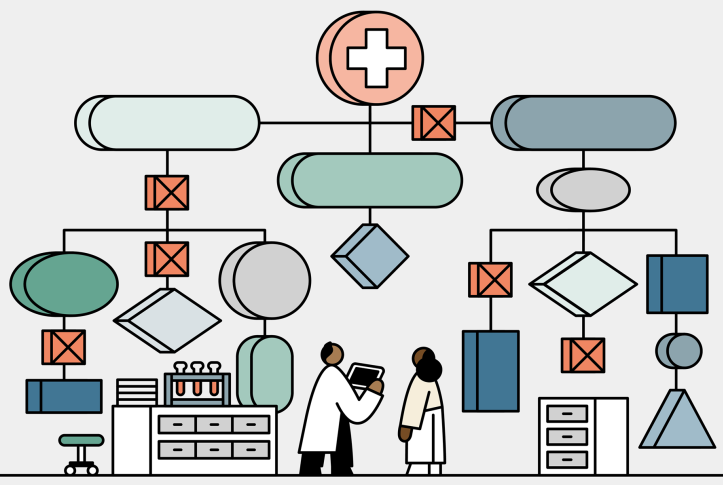

Obermeyer is the author of groundbreaking research on algorithms, which are used on a massive scale in health care systems — for instance, to predict who is likely to get sick and then to direct resources to those populations. But they can also entrench racism and inequality in the system.

“We’ve accumulated so much data in our electronic medical records, in our insurance claims, in lots of other parts of society, and that’s really powerful,” Obermeyer says. “But if we aren’t super careful in what lessons we learn from that history, we’re going to teach algorithms bad lessons, too.”

Transcript

JOEL BERVELL: Welcome back to The Dose. On today’s episode, we’re going to talk about the potential of machine learning in informing health outcomes, for better and for worse, with my guest Dr. Ziad Obermeyer. Dr. Obermeyer is an associate professor of health policy and management at the UC Berkeley School of Public Health, where he does research at the intersection of machine learning, medicine, and health policy.

He was previously an assistant professor at Harvard Medical School, and he continues to practice emergency medicine in underserved parts of the United States. Prior to his career in medicine, Dr. Obermeyer worked as a consultant to pharmaceutical and global health clients at McKinsey and Company. His work has been published in Science, Nature, the New England Journal of Medicine, and JAMA, among others, and he’s a cofounder of two tech companies focused on data-informed health care.

Dr. Ziad Obermeyer, thank you so much for joining me on The Dose.

ZIAD OBERMEYER: It’s such a pleasure.

JOEL BERVELL: So before we dive in, here’s the reason why I invited Dr. Obermeyer for this conversation. His work is groundbreaking. I remember reading one of your articles in the journal Science, and it blew my mind. The paper showed that a prediction algorithm health systems use to identify patients with complex health needs exhibited significant racial bias. At a given risk score, Black patients were considerably sicker than white patients. Remedying that disparity would have increased the percentage of Black patients receiving additional help from 17.7 to 46.5 percent.

So we know that’s the math and that’s the bias. While Dr. Obermeyer has researched biased algorithms like that one, he’s also working on understanding how to correct those biases. And I’d venture to guess that he’s a little bit optimistic, but I’ll let him talk more about that. Algorithms can’t change history, but they can significantly shape the future, for better or for worse. So as we dive in, Dr. Obermeyer, my first question: I’m hoping you can give our listeners an overall understanding of why and how algorithms became a part of the health care equation.

ZIAD OBERMEYER: Yeah. Thanks for that very kind introduction. And I really like the way you put it, that they can’t change history — they learn from history. But the way we choose to use them, I think, can either have a very positive effect on the future, or it can just reinforce all of the things we don’t like about our history and the history of our country and the health care system.

So I think whether we like it or not, algorithms are already being used at a really massive scale in our health care system. And I think that’s not always obvious, because when you go to your doctor’s office, it really looks pretty similar to the way it did in probably 1985 or whatever. There are still fax machines, your doctor still has a pager. There’s all this stuff that doesn’t look very modern. But on the backend of the health care system, things are completely different.

So I think that on the backend, what we’re seeing is massive adoption of these algorithms for things like population health. When a health system has a large population of patients that it’s responsible for, it needs to make decisions at scale. We can’t be back in this world of see one patient, treat them, release them, see the next patient. You have to be proactive. You have to figure out which of your patients, even if they look okay today, are going to get sick, so that we can help them today.

So I think algorithms are really good at predicting stuff, like who’s going to get sick. And so I think that that use of algorithms actually sounds really good to me. We would like our health system to be more proactive, and to direct resources to people who need it to prevent chronic illnesses, to keep them out of the emergency department and out of the hospital. So that use case to me sounds really great and positive. I think the problem is that a lot of the algorithms that are being used for that thing have this huge bias that you pointed to.

JOEL BERVELL: And can you give us a snapshot of the impact machine learning has had on the delivery of health care since its introduction in this space? You gave us a great overview of the dangers and of how we should look at them. So how is it actually being used right now?

ZIAD OBERMEYER: Yeah, I can tell you how our partner health system, in the setting that we studied, was using this kind of algorithm. Basically, they have a population of patients that they’re responsible for, like a primary care population. Three times a year, they would run this algorithm, and it would generate a list scoring people according to their risk of getting sick, basically, or who needed help today. The top few percent were automatically enrolled in this extra help program, a high-risk care management program for the population health of the [unclear] out there. Those people automatically got in. Approximately the next half were shown to their doctors: the people running the program asked the primary care doctors, “Here are some patients who might need help, or they might not. We want you to decide.” So the doctor would check off who needs help and who doesn’t. And the bottom 50 percent just got screened out, so they were never considered for access to that program.

And so the algorithm was very concretely determining your level of access to this kind of VIP program for people who really needed help. And what we found was that, because of the bias in the algorithm that we studied, that algorithm was effectively letting healthier white patients cut in line ahead of sicker Black patients.

So that was how it was used at the one system we studied. In the follow-up work we did, it was being used pretty similarly in lots of other systems. And just for context, by the estimates of the company that makes it, this one algorithm that we studied is being used to screen 70 million people every year in this country. And for the family of algorithms that work just like the one we studied, it’s 150 million people. So the majority of the U.S. population is being screened through one of these things that gates who gets access to help and who doesn’t.
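As a rough illustration of the three-tier gating described in this answer, the sketch below ranks a population by risk score and assigns each patient a tier. The cutoffs are assumptions: the interview only says “the top few percent” were auto-enrolled and “the bottom 50 percent” were screened out, so the 97th-percentile threshold is a hypothetical stand-in, and `percentile_ranks` and `triage` are illustrative names, not the vendor’s or the health system’s code.

```python
# Hypothetical cutoffs for illustration only; the real program's values may differ.
AUTO_ENROLL_PERCENTILE = 97.0  # stand-in for "the top few percent"
REFER_PERCENTILE = 50.0        # the bottom 50 percent are screened out


def percentile_ranks(scores: list[float]) -> list[float]:
    """Return each score's percentile rank (0 up to, but not including, 100)."""
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i])
    ranks = [0.0] * n
    for position, i in enumerate(order):
        ranks[i] = 100.0 * position / n
    return ranks


def triage(scores: list[float]) -> list[str]:
    """Map each patient's risk score to one of three program tiers."""
    tiers = []
    for rank in percentile_ranks(scores):
        if rank >= AUTO_ENROLL_PERCENTILE:
            tiers.append("auto_enroll")      # enrolled with no human review
        elif rank >= REFER_PERCENTILE:
            tiers.append("refer_to_doctor")  # primary care doctor decides
        else:
            tiers.append("screened_out")     # never considered for the program
    return tiers


if __name__ == "__main__":
    tiers = triage([float(s) for s in range(100)])
    print({t: tiers.count(t) for t in ("auto_enroll", "refer_to_doctor", "screened_out")})
    # {'auto_enroll': 3, 'refer_to_doctor': 47, 'screened_out': 50}
```

The sketch makes one point concrete: anyone the score ranks in the bottom tier is never seen by a doctor at all, so a bias in the score translates directly into who gets access.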

JOEL BERVELL: Absolutely. And I think so many times in health care disparities, we think about the front end of it, whether it’s doctors, or patients not seeking care, or access issues. But what you’re pointing to is this larger issue of systems that have been built into things we’re not often looking at. I think it’s so key to emphasize that, because everything goes into this. If we focus on what looks like the problem on the front end but miss these backend things, then we don’t actually solve or help mitigate health care disparities.

ZIAD OBERMEYER: I like that way of looking at it. And I think the front end is very visible, and it attracts a lot of attention. And I think there’s been a lot of attention recently to, for example, the tools that doctors are using to decide on pulmonary function or kidney function, but the way those things are made on the backend is really, really important. And that’s where either you can do a lot of good by making those things work well and equitably or you can do a lot of harm.

JOEL BERVELL: Absolutely. One of our earlier episodes was actually about the GFR (glomerular filtration rate) equation, and exactly that: how an algorithm can have bias built into it without anyone knowing. As I was going through medical school, I remember hearing about the GFR equation, not in my classroom, but from reading medical publications that were coming out saying, “Why are we using this equation right now? Do people even know we’re using it?”

And I posted a video about it on TikTok that ended up getting over a million views. The funny thing is that half of the doctors, nurses, and PAs responding were saying, “I never even knew this existed.” So, to your point: it’s great to know the front end, but if the backend isn’t seen, we miss out on so much.

ZIAD OBERMEYER: Yeah, I think it’s great that people are starting to pay so much attention to these issues. And one of the things I’ve learned over the past few years of working on this is just how complicated some of these issues are, and how much you really need to dive into the details.

I think where that’s taken me is this: there’s a very clear-cut case to be made that when we hard-code race adjustments into algorithms, that can be really harmful. When we make an assumption that, for example, Black patients have lower pulmonary volumes or something like that, and we hard-code that in, that’s really bad.

On the other hand, I think there are some settings where you actually would really want to include race-based corrections. And let me give you a quickie. I’ll try this example out on you.

So this is from a paper I’m working on with two colleagues, Anna Zink and Emma Pierson. They’re both fantastic researchers who are really interested in fairness in medicine and elsewhere. So I want you to think about family history as a variable. This is one of the variables we use to figure out who needs to be screened for cancer. People who have a family history of, for example, breast cancer are at higher risk, so we want to screen them more. But if you think about what family history is, it’s something about history. It’s something about your family’s historical access to health care.

So now, if I told you here are two women, one Black and one white, and neither of them has a family history of breast cancer, you can feel reassured about the white woman: her family has historically had a lot of access to care, so if they had breast cancer, they were likely to get diagnosed. But for the Black woman, the fact that she doesn’t have a family history is a lot less meaningful, given that we’re not just dealing with the inequalities in medicine and the health care system today. We’re dealing with all the inequalities of the past decades, when they were much, much worse.

And so if an algorithm that’s being used to decide on cancer screening doesn’t know who’s Black and who’s white, it’s not going to know whether to take that family history seriously or less seriously. And so I think what I’ve learned just from doing some of this work is just how nuanced and complicated these things are. And sometimes, you don’t want a race adjustment. And other times, you really do want a race adjustment and you really have to dive into those details.

* * * * *

We’ll get right back to the interview. But first, I want to tell you about a podcast from our partners at STAT.

Racism in medicine is a national emergency. STAT’s podcast, Color Code, is raising the alarm. Hosted by award-winning journalist Nicholas St. Fleur, Color Code explores racial health inequities in America and highlights the doctors, researchers, and activists trying so hard to fix them. You can listen to the first eight-episode season of Color Code anywhere you get your podcasts. And stay tuned for season 2 coming later this spring.

* * * * *

JOEL BERVELL: So you’re a doctor by training, but you’re also a scientist, an associate professor, and I’d venture to say a tech bro, because you kind of exist in that world too. You’re a cofounder of multiple companies, and you’re interested in open access to large data sets. I’m curious: when you first look at a data set (maybe we can use the example of the first study I laid out), what jumps out at you first? What are you looking for, and how do you diagnose that bias, because it can be so hard to find?

ZIAD OBERMEYER: Yeah, absolutely. So I think the way I thought about the work that we were doing on this population health management algorithm originally was that when we looked at what these algorithms were doing, as we talked about, they were supposed to be predicting who’s going to get sick so we can help them today. So that’s what we thought they were doing and that’s how they were marketed and that’s how people used them. But what were they actually doing?

Well, actually, there’s no variable in the data set that these algorithms learn from called “get sick.” In fact, there’s no variable like that anywhere. There are lots of different ways to get sick, and that’s captured across lots of different variables: your blood pressure, your kidney function, how many hospitalizations you have, what medications you’re on . . . all that stuff. It’s complicated.

So instead of dealing with that whole complicated mess of medical data, what the algorithm developers do generally is they predict a very simple variable called “cost” — health care cost — as a proxy for all of that other stuff.

And now you don’t have to deal with all this other stuff; you can just use one variable. And that variable on its face is not that unreasonable, because when people get sick, they generally do cost more money. That happens for both Black patients and white patients. The problem is that it doesn’t happen the same way for Black patients and white patients, and that’s where the bias in this algorithm comes from. But what struck us, the team of people that worked on this originally, was that this doesn’t seem like a very good way to train the algorithms. We don’t actually care how much people are going to cost. We care about who’s going to get sick and how we can help them. And we want to redirect resources not to people who are going to cost a lot of money, but to people who are going to get sick, where we can make an impact on their care and make them better.

So for me, partly coming out of my own experience as a doctor, I’m really focused on how algorithms can help us make better decisions, closing the loop from the data to the things we do every day in the health care system, whether that’s as doctors or nurses or as people responsible for populations in a pop health division. Trying to align the values of the algorithm with the values of our health care system, at least the ones we want, is where a lot of my work comes from.
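One way to make the diagnosis described in this answer concrete is to hold the risk score fixed and compare a direct measure of health across groups: if the score tracked need rather than spending, patients in the same score bin would be about equally sick. The sketch below is a minimal version under stated assumptions: each record is assumed to carry a risk score, a group label, and a count of active chronic conditions as the sickness measure; the field names, the quantile binning, and `sickness_by_score_bin` are hypothetical, not the study’s code.

```python
from bisect import bisect_right
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Patient:
    risk_score: float        # algorithm output (here, trained to predict cost)
    group: str               # e.g., self-reported race
    chronic_conditions: int  # a direct measure of sickness, not of spending


def sickness_by_score_bin(
    patients: list[Patient], n_bins: int = 10
) -> dict[tuple[int, str], float]:
    """Mean chronic-condition count for each (score bin, group) pair."""
    scores = sorted(p.risk_score for p in patients)
    n = len(scores)
    # Bin edges at score quantiles, so each bin holds roughly n / n_bins patients.
    edges = [scores[min(n - 1, (k * n) // n_bins)] for k in range(1, n_bins)]

    sums: dict[tuple[int, str], int] = defaultdict(int)
    counts: dict[tuple[int, str], int] = defaultdict(int)
    for p in patients:
        b = bisect_right(edges, p.risk_score)  # number of edges <= this score
        sums[(b, p.group)] += p.chronic_conditions
        counts[(b, p.group)] += 1
    return {key: sums[key] / counts[key] for key in sums}
```

If one group is consistently sicker within the same bin, the score is understating that group’s need, which is the shape of the finding summarized at the top of the episode: at a given risk score, Black patients were considerably sicker than white patients.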

JOEL BERVELL: I love that. And as you were talking, it reminded me of another one of your studies that I read. It was about an algorithmic approach to reducing unexplained pain disparities in underserved populations. I’m hoping you can tell listeners a little bit about that research, because I found it so fascinating when I read it.

ZIAD OBERMEYER: Oh, thank you. I think where that research started was a really puzzling finding that’s been known in the literature for a long time, which is that pain is just much more common in some populations than others. If you look at surveys asking whether people have been in severe pain in the last couple of days, I think Black patients report that twice as often as white patients at the population level, which is really striking.

If you think of all of the inequalities in society that we know about, this is one that I think often isn’t mentioned or paid attention to, but it’s quite striking. As anyone who’s been in severe back or knee pain knows, it’s really hard to live your life when you’re in severe pain. And that’s just much more common in some populations than others.

So there’s one explanation for this, which I think is everyone’s first thought: well, yeah, we know that things like arthritis are more common in Black patients than white patients, or in lower-socioeconomic-status patients than higher. But that turns out not to be the full story. There are studies that take patients whose knees, for example, look the same in the degree of arthritis they have, and then ask the question, who has more pain? And it turns out that even among people whose knees appear the same to a radiologist, Black patients still report more pain.

So there’s this gap in pain between Black and white patients even when you’ve held constant what their knees look like to a radiologist, which is kind of striking. So where’s that coming from? It could be coming from lots of other things in society, or from depression and anxiety. There are many other things that could explain that. But we had a different approach. What we were interested in is: could an algorithm explain that gap by looking at their knees? Could an algorithm find something that human radiologists were missing and, as a result, explain that gap in pain between Black and white patients?

And so what we did is we trained an algorithm to look at the knee and answer a very simple question, which is: given these pixels in the picture of the knee, is this knee likely to be reported as painful or not? So we just trained an algorithm to basically listen to the patient and listen to their report of pain and link that back to the X-ray.

And so we found two things. One is that the algorithm did a better job than radiologists of explaining pain in everybody. We compared how much of the variation in pain was explained by the algorithm’s score versus the radiologist’s score. By radiologist score, I mean one of the standard scoring systems for knee arthritis, which is what we were studying in this case: the Kellgren-Lawrence grade, as written by the radiologist in their report. Compared to that, the algorithm did a better job for everybody.

But the algorithm did a particularly good job in explaining pain that was reported by Black patients but not explained by the radiologist’s report. So the algorithm was finding things that were in the knee that explained the pain of these Black patients more than white patients, and the radiologists were missing it.

At least in retrospect, that makes sense when you look back at those radiology scores. Where did they come from? They were developed and validated in populations of coal miners in England in the 1940s and ’50s. Those patients were all white and male. And it’s not all that surprising that the science developed in that particular time and place isn’t the science we need for the kinds of patients who see doctors today. So for me, that highlighted the fact that just as algorithms can do a lot of terrible things and scale up and automate racism and inequality, they can also do a lot of really useful things and undo some of the biases that are built into the DNA of medical knowledge.

JOEL BERVELL: I’m so glad you kind of ended with that. Because it leads perfectly into my next question, which is how do we flip that script, so to speak? We’ve heard so much about AI reinforcing bias, but how can AI mine out that bias and create more equitable health care spaces?

ZIAD OBERMEYER: I loved, just to go back to the way you initially framed it, that algorithms do learn from history. There’s nothing we can do about that, and that’s where algorithms’ power comes from. We’ve accumulated so much data in our electronic medical records, in our insurance claims, in lots of other parts of society, and that’s really powerful. But if we aren’t super careful in what lessons we learn from that history, we’re going to teach algorithms those bad lessons too.

And so when we teach an algorithm to find people who are going to get sick, we cannot measure sickness with cost, because when we do, we bake in all of those inequalities. Instead, we can find different measures of sickness: among people who got their blood pressure taken, what was their blood pressure? Among people who got tested for something, what did the test show?

So we need to be really, really careful about how we train those algorithms, training them on measures that are about patients, what happens to them, and their outcomes, rather than about doctors, the health care system, and the care that people get. By reframing it around what care people need, not what care people get, I think we can train algorithms that are much, much more just and equitable, and that’ll undo some of these biases in the history so that we can have a better future.
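The difference between training on the care people get and the care people need can be shown with a toy ranking. The four patients and their numbers are invented purely for illustration (not data from any study): group B is assumed to be sicker but to have lower spending, standing in for reduced access to care. The `Patient` fields and `top_k` helper are hypothetical. Ranking on the cost label and on a health label then selects entirely different people.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    pid: str
    group: str
    annual_cost: float       # what was spent: the care people *get*
    chronic_conditions: int  # a health measure: closer to the care people *need*


def top_k(patients: list[Patient], label: str, k: int) -> set[str]:
    """IDs of the k patients ranked highest on the chosen label."""
    ranked = sorted(patients, key=lambda p: getattr(p, label), reverse=True)
    return {p.pid for p in ranked[:k]}


# Invented example data (not from any study).
patients = [
    Patient("p1", "A", annual_cost=9000, chronic_conditions=3),
    Patient("p2", "A", annual_cost=8000, chronic_conditions=2),
    Patient("p3", "B", annual_cost=5000, chronic_conditions=5),
    Patient("p4", "B", annual_cost=4000, chronic_conditions=4),
]

print(sorted(top_k(patients, "annual_cost", 2)))         # ['p1', 'p2']
print(sorted(top_k(patients, "chronic_conditions", 2)))  # ['p3', 'p4']
```

With cost as the label, the extra-help slots go to the group that was already getting more care; with a health measure as the label, they go to the patients who are sicker.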

JOEL BERVELL: Yeah. So what near-term and long-term potential do you see for ensuring greater equity in patient care, given that the incumbent systems are built so differently? You’ve eloquently highlighted the need to change these systems, but where can we go? What’s the potential?

ZIAD OBERMEYER: I think right now, it’s really, really hard to build algorithms and it’s really, really hard to audit algorithms to check how they’re doing, because it’s so hard to get data. So as you mentioned, there are a couple of organizations that I’ve cofounded that try to make it easier for people to do these things.

And so one of them is a nonprofit, it’s called Nightingale Open Science. It’s a philanthropically funded entity that works with health systems to produce interesting data sets that can promote AI research that solves genuine medical problems in a way that’s equitable and fair. And so I think giving researchers access to those data is really important. I think it’s also really important to give people who want to build AI products access to those data too. So the other organization I cofounded is a for-profit company called Dandelion, and that aims to make data available to people who want to develop algorithms.

We’re also offering a service, or we’ll soon be offering a service where anyone who’s developed an algorithm can, for free, give us access to that algorithm in a way that lets us run the algorithm on our data and generate performance metrics overall, but also broken out by different racial groups, by geography, by whether someone’s in a fee-for-service environment or a value-based environment.

So understanding how algorithms work in these different populations is super, super important. It’s not something that’s easy to do now, but it’s something that I’m very committed to. And so it’s something we’re going to be doing through that company as almost like a public service.

JOEL BERVELL: Yeah. That’s so incredible, and I love that your company’s doing that, to see how it works in the real world. How is it actually going to affect people? And do you think it’s a people problem that got us here? Is it a hiring problem? Where does this issue come from?

ZIAD OBERMEYER: I mean, I think we have a lot of strides to make in increasing diversity, in lots of different senses, among people who have access to data, who can build algorithms, and things like that. And the longer we don’t do that, the more we’re going to get unequal outcomes.

I think, though, going back to our population health example, that even though that algorithm was developed by a company, the people I talked to at that company, maybe they were just really good actors, but they seemed like really good people who wanted to do the right thing. And when we communicated what we found to them, they were genuinely motivated to fix it. On the other hand, when you look at who was buying and applying that algorithm to their populations, these are people working in population health management with deep commitments to equity and social justice. They are also really good people, but they were using and applying a biased tool.

And so I think for me, this is all fairly new. We haven’t had algorithms for that long. We as a society and as scientists are just starting to figure out where the bias comes from, how to get it out, what to do about these things. So I think we’re all in this together. We’re all trying to learn. And I think almost everyone’s trying to do the right thing.

And so I think giving people access to data so that they can build and validate and audit algorithms is super important. And setting up incentive structures that do that is really, really important. And then elevating the work that is trying to really speak to things that improve patient outcomes and that improve equity is really important. And it’s one of the reasons I’m so glad to be here today.

JOEL BERVELL: So I really want to get into some of the health systems that you’ve worked with. Can you take us to a health care system or hospital that you’ve either worked with or seen where this improved AI approach has worked in practice, compared to traditional peer institutions?

ZIAD OBERMEYER: Yeah, I can talk about some general types of people that we’ve worked with. So we were very lucky to get a lot of publicity for some of the work that we did. And that generated a lot of people reaching out to us to try to figure out if some of the algorithms that they were building or using or thinking about purchasing were biased.

And so we worked with a number of health insurance companies and government-based insurers that were using population health management algorithms very similar to the one we studied. What we found is that, unsurprisingly, the algorithms predicting health care costs in those settings were all biased. But optimistically, building on the work we described in that original paper in Science, I think applying those fixes worked in those settings too, or at least we would expect them to work in those settings as well.

JOEL BERVELL: Yeah. I was going to ask, what were some of the challenges of that implementation? You mentioned the unsurprising part, but were there any surprises?

ZIAD OBERMEYER: I think some of the surprises that came out of that work were related to how little oversight there is right now in organizations that are using algorithms. So I think what we saw was that in lots of different parts of the organization, at lots of different levels, people were just kind of building or buying or pushing out these algorithms without a lot of evaluation or even just forethought beforehand.

And so even though these algorithms, the whole point is to affect decision-making at huge scale for tens of thousands, hundreds of thousands, millions of patients, I think the level of oversight was just shockingly little. And in fact, most organizations didn’t even have a list of all the algorithms that were being used in that organization. So it was really hard to have any oversight over these very powerful tools, if you don’t even know what’s being used.

In parallel, there’s no person who’s responsible. I think anyone who’s been following the Silicon Valley Bank story knows that part of the story is there was no chief risk officer for, whatever, the past eight months. And I think that for years, none of our health care systems have had a chief anything officer with a mandate to look at the risks and the benefits of the algorithms being used in those massive companies.

So I think there’s also this organizational failure in terms of putting someone in charge and making their job description say: you are responsible for the good things that you can do with algorithms, but also the bad things that happen. That’s on you. And until there’s that kind of responsibility in organizations, there can be no accountability, because who’s accountable?

JOEL BERVELL: Is there resistance to that kind of innovation that you’re doing? You’re coming into these places and saying, “Hey guys, you’re doing this wrong.” Or the things that you think are helping people out may be hurting them. Has there been resistance to that kind of innovation of relooking at and rethinking and reframing how we think about algorithms impacting these hundreds of thousands to millions of people every single day?

ZIAD OBERMEYER: I mean, I don’t think there’s any resistance in principle, but unfortunately, most organizations don’t work in principle. They work in practice. And in practice, one of the reactions we got . . . Our suggestion was: make a list. Just figure out what is being used in your organization and then figure out, “Okay, is it any good if we run the prediction and just look at how it’s doing on the thing that it’s supposed to be doing? Is it good? Is it good for people overall? Is it good for the groups that you care about protecting? Let’s just generate those numbers for all the algorithms being used,” and then put someone in charge of algorithms. Especially the first part of getting the list and generating the metrics, the reaction we got at a hundred percent of the places we talked to was like, “Wow, that sounds like a lot of work.” And it’s like, “Yeah, that’s right.”

JOEL BERVELL: Yeah.

ZIAD OBERMEYER: But these things are being used to affect decisions for a lot of people. So yes, it probably should be a lot of work to figure out if they’re doing a good job. Within organizations, I think there is movement, and I think that’s positive. And that’s helped by the fact that there are also a lot of people in law enforcement who are now looking at specific cases of algorithms that are biased. Unfortunately, I can’t talk about some of that work that I’m also doing, but I think it’ll be a really valuable complement to what people are motivated to do internally but sometimes can’t successfully advocate resources for.
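The audit suggested in this exchange (list every algorithm in use, run each one on the thing it is supposed to predict, check how it does overall and for the groups you care about, and name someone in charge) can be sketched in a few lines. This is a minimal sketch assuming each algorithm can be called as a function on a record’s features and that the outcome it is meant to predict is observed; AUC is used as one example metric among many, and the `Record`, `AlgorithmEntry`, and `audit` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


def auc(scores: list[float], labels: list[int]) -> float:
    """ROC AUC by pairwise comparison: P(positive scored above negative), ties = 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        return float("nan")  # undefined without both outcome classes
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


@dataclass
class Record:
    features: dict
    group: str
    outcome: int  # 1 if the thing the algorithm is meant to predict happened


@dataclass
class AlgorithmEntry:
    name: str
    owner: str  # the person accountable for this algorithm
    predict: Callable[[dict], float]


def audit(inventory: list[AlgorithmEntry], records: list[Record]) -> dict[str, dict[str, float]]:
    """AUC overall and per group for every algorithm in the inventory."""
    groups = sorted({r.group for r in records})
    labels = [r.outcome for r in records]
    report = {}
    for algo in inventory:
        scores = [algo.predict(r.features) for r in records]
        row = {"overall": auc(scores, labels)}
        for g in groups:
            idx = [i for i, r in enumerate(records) if r.group == g]
            row[g] = auc([scores[i] for i in idx], [labels[i] for i in idx])
        report[algo.name] = row
    return report


if __name__ == "__main__":
    records = [
        Record({"x": 0.9}, "A", 1), Record({"x": 0.1}, "A", 0),
        Record({"x": 0.2}, "B", 1), Record({"x": 0.8}, "B", 0),
    ]
    inventory = [AlgorithmEntry("toy_model", "hypothetical owner", lambda f: f["x"])]
    print(audit(inventory, records))
    # {'toy_model': {'overall': 0.75, 'A': 1.0, 'B': 0.0}}
```

In the toy data, the model ranks group A perfectly and group B backwards, yet its overall AUC of 0.75 looks acceptable; only the per-group breakdown exposes the problem.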

JOEL BERVELL: Yeah. I’m literally getting chills talking to you, just because the work you’re doing is that important. I think the research you’re doing is changing the way that we understand how do we approach disparities, but also how do we approach just these algorithms that are affecting everyone today? And I have to ask as a last question, where’s your research heading? And what next problems are in queue for you? Because I think you’re already doing incredible things, and I’m sure there’s so much you can’t talk about, but what can we know about what’s coming next?

ZIAD OBERMEYER: Thanks for asking, and thank you for all the kind words. One of the things I’m working on now that I’m really excited about, and that’s very related to what we’ve been talking about: a couple of years ago, one of my coauthors and I published a paper describing a machine learning algorithm that helps emergency physicians like myself risk-stratify patients in the emergency department for acute coronary syndrome.

So we wrote that paper, and it looks good on paper: we validated it in one hospital’s electronic health record data, and retrospectively, we validated it in Medicare claims across the country. So that’s all well and good, and that produced a paper. But what’s next? This is something that has the potential to affect a life-and-death decision for people. So how do you evaluate that? How do you make sure it’s doing a good job?

And I think for those kinds of algorithms, you can’t just write a paper showing that it looks good on data from the past few years and say you should implement it. So we’re working with a large health system on the West Coast called Providence, which has lots and lots of hospitals: a great system with lots of great data. And we’re actually implementing that algorithm as a randomized trial. We’re rebuilding the algorithm right now inside their data infrastructure, and then we’re going to roll it out in a randomized way across some of their hospitals. We’re going to see if the effects we saw on paper actually hold up in real life: if doctors use it, if they find value in it, and if it actually catches all the missed heart attacks that we think are currently going through these emergency departments without being caught.

So I think that kind of rigorous evaluation, it’s something we insist on for drugs, but right now we don’t insist on at all for algorithms. And I think especially for these very important consequential algorithms, that standard of evidence is really important.

JOEL BERVELL: Funny enough, I’m very familiar with Providence. I’m from the West Coast and actually worked at Providence for a year in clinical research, and that’s where my med school does our rotations too.

ZIAD OBERMEYER: All right.

JOEL BERVELL: So lots of connections.

ZIAD OBERMEYER: Well, maybe we’ll run into each other in Oregon or Washington or California or Alaska.

JOEL BERVELL: Hopefully. Fingers crossed. Well, Dr. Obermeyer, thank you so much for your time and for the work you’re doing. Like I said before, you are changing the game. You’re looking at places that haven’t been looked at before, uncovering things that we don’t even realize are impacting patients all over. And thank you so much for being here.

ZIAD OBERMEYER: Thank you so much for having me on the show.

JOEL BERVELL: This episode of The Dose was produced by Jody Becker, Mickey Capper, and Naomi Leibowitz. Special thanks to Barry Scholl for editing, Jen Wilson and Rose Wong for art and design, and Paul Frame for web support. Our theme music is “Arizona Moon” by Blue Dot Sessions. If you want to check us out online, visit thedose.show. There you’ll be able to learn more about today’s episode and explore other resources. That’s it for The Dose. I’m Joel Bervell, and thank you for listening.